Web Search Engine's Search Attractors

We report on the current state of the research on search attractors. We finally succeeded in coding Magnus Sahlgren's computational model into NodeSpaces V2.0 (for a complete description of Sahlgren's model, please refer to Sahlgren's dissertation and the relevant bibliography available both there and at www.forgottenlanguages.blogspot.com).

The hypothesis we wanted to test runs as follows:

- performing a looped search for a given term using a given search engine is an iterative process that converges to a single search term (the search attractor).

We follow Sahlgren's definitions herein. More specifically, the computational model used is the word-space model (a term coined by Heinrich Schütze in 1993). According to Schütze, vector similarity is the only information present in word space: semantically related words are close, while unrelated words are distant.
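To make "close" and "distant" concrete, here is a minimal sketch of a vector similarity measure. Cosine similarity is assumed here as one common choice; the text above does not say which measure NodeSpaces V2.0 uses. The `word_space` vectors are invented toy values, not experimental data.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # convention chosen here for all-zero vectors
    return dot / (norm_u * norm_v)

# Hypothetical context vectors (toy counts, for illustration only).
word_space = {
    "search": [4, 3, 0, 1],
    "query": [3, 4, 0, 0],
    "banana": [0, 0, 5, 2],
}

def nearest(word, space):
    """Return the other word whose vector is most similar to `word`."""
    others = (w for w in space if w != word)
    return max(others, key=lambda w: cosine_similarity(space[word], space[w]))

print(nearest("search", word_space))
```

In this toy space "search" lies much closer to "query" than to "banana", which is all the model has to go on when it treats two words as related.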

Quoting Sahlgren, "the word-space model is a computational model of word meaning that utilizes the distributional patterns of words collected over large text data to represent semantic similarity between words in terms of spatial proximity". Note that the question he attempts to answer is what kind of semantic information the word-space model acquires and represents. We take his conclusions about what the model represents as given; our interest is in using the word-space model to make inferences from the results we obtained in our "search within the search" experiments.

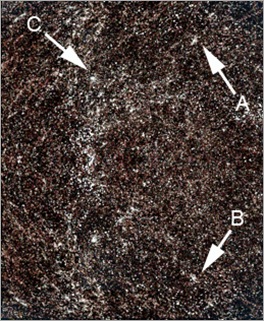

Note also that the main search engine we used in this run is Google's. This means that we need to take into account the nature of Google's search algorithm, that is, the way it searches for, detects, identifies, isolates, and presents the search results. The graphic was generated using the built-in proximity-network capability of NodeSpaces V2.0.

Finally, we recall that although the word-space model acquires and represents two different types of relations between words (syntagmatic versus paradigmatic relations), depending on how the distributional patterns of words are used to accumulate word spaces, we are only interested in the relation between search results and meaning. By "meaning" we understand here a measure of how meaningful the search results were for the human performing the search. Following Sahlgren, we rely on a computational and not a psychological model of meaning. This is so because the NodeSpaces V2.0 word-space model is based entirely on language data, rather than on a priori assumptions about language. We feel comfortable with this definition of "meaning".

Again, by "language" we mean written language, following Sahlgren. By "word" we mean "white-space delimited sequences of letters that have been morphologically normalized to base forms" (that is, the result of a lemmatization process).
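As an illustration of this definition of "word", the sketch below splits text on white space, keeps only letters, and maps each form to a base form. The lemma table `TOY_LEMMAS` is hypothetical and tiny; a real run would rely on a proper lemmatizer. Lower-casing is an extra assumption not stated in the definition.

```python
# Hypothetical lemma table, for illustration only.
TOY_LEMMAS = {
    "languages": "language",
    "searches": "search",
    "searching": "search",
    "forgotten": "forget",
}

def to_words(text, lemmas=TOY_LEMMAS):
    """Turn raw text into a list of normalized words."""
    words = []
    for chunk in text.split():  # white-space delimited chunks
        letters = "".join(ch for ch in chunk if ch.isalpha()).lower()
        if letters:  # drop chunks that contain no letters at all
            words.append(lemmas.get(letters, letters))
    return words

print(to_words("Forgotten languages, searching 2 searches!"))
```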

The key point (as per Sahlgren) is that the word-space model is not only the spatial representation of meanings but also the way the space is built. Quoting Sahlgren again:

"What makes the word-space model unique in comparison with other geometrical models of meaning is that the space is constructed with no human intervention, and with no a priori knowledge or constraints about meaning similarities. In the word-space model, the similarities between words are automatically extracted from language data by looking at empirical evidence of real language use".

A typical session with NodeSpaces V2.0 runs as follows:

- define a search term (say, 'forgotten languages')

- perform an automatic search of the search term using Google's search engine

- save result page (let's limit here to the first page showing the first 10 results)

- using the NodeSpaces HTML scraping utility, extract text from the result page

- perform a word density analysis (word frequency, mean sentence length, etc.) and generate a ranked list of words

- use the generated words as a new search term and repeat the process (obviously, the initial search term - the seed term - is not taken into account)
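The middle steps of this session (extracting text from a saved result page and ranking its words) can be sketched as follows. This is only a minimal sketch built on Python's standard library, not the NodeSpaces scraping utility itself: the names `TextExtractor`, `extract_text` and `ranked_words` are hypothetical, only raw word frequency is computed, and no stop-word filtering is applied.

```python
from collections import Counter
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collects text nodes, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)

def extract_text(html):
    """Return the text content of an HTML page as one string."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)

def ranked_words(text, seed_term, top_n=3):
    """Rank words by frequency, leaving out the words of the seed term."""
    seed = set(seed_term.lower().split())
    words = ("".join(c for c in w if c.isalpha()).lower() for w in text.split())
    counts = Counter(w for w in words if w and w not in seed)
    return [w for w, _ in counts.most_common(top_n)]

# Toy result page (invented content, not a real search result).
page = "<html><body><p>Cipher cipher cipher text text languages forgotten</p></body></html>"
next_term = " ".join(ranked_words(extract_text(page), "forgotten languages", top_n=2))
print(next_term)
```

The string `next_term` is what would be fed back to the search engine in the next pass of the loop.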

The key question under investigation is this: does this search process converge? The answer is "yes".
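What "converge" means operationally can be sketched as a fixed-point search: keep replacing the term with the term derived from its results until the term stops changing. In the sketch below the search engine is replaced by a hypothetical lookup table, `TOY_ENGINE`, with invented entries; `find_attractor` and its `max_steps` limit are likewise assumptions made for illustration.

```python
def find_attractor(seed, next_term, max_steps=50):
    """Iterate term -> next_term(term) until the term repeats.

    Returns (trajectory, attractor). The attractor is the term on which
    the loop settles, or None if the loop enters a longer cycle or does
    not settle within max_steps.
    """
    trajectory = [seed]
    seen = {seed}
    term = seed
    for _ in range(max_steps):
        new_term = next_term(term)
        if new_term == term:
            return trajectory, term  # fixed point reached
        trajectory.append(new_term)
        if new_term in seen:
            return trajectory, None  # cycle of length greater than one
        seen.add(new_term)
        term = new_term
    return trajectory, None

# Hypothetical stand-in for "search, scrape, rank, pick the new term".
TOY_ENGINE = {
    "forgotten languages": "lost scripts",
    "lost scripts": "cipher",
    "cipher": "cipher",
}

path, attractor = find_attractor("forgotten languages", lambda t: TOY_ENGINE.get(t, t))
print(path, attractor)
```

The same function also reports the case where the loop oscillates between several terms instead of settling on one, which is the outcome the hypothesis rules out.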

Obviously, there are related questions of interest here, such as whether the language of the search term has any influence on the attractor, or whether using the attractor as a seed search term reduces the time required to explore a given word space (in our case, a search space).

Results on search attractors do comply with Ruge's statement "Inside of a large context there are lots of terms not semantically compatible. In large contexts nearly every term can co-occur with every other; thus this must not mean anything for their semantic properties", but they clearly violate Picard's observation that the majority of terms never co-occur. In fact, the idea that the smaller the context regions used to collect syntagmatic information, the poorer the statistical foundation and, consequently, the worse the sparse-data problem for the resulting word space, holds only partially.

Sahlgren's experiments concluded that using a small context region yields more syntagmatic spaces than using a large one, and that using a narrow context window yields more paradigmatic spaces than using a wide window. But bear in mind that he is interested in testing whether it is at all possible to extract semantic knowledge by merely looking at usage data, the answer being "yes". Our interest, on the other hand, lies in whether we can define semantic universals out of the attractors we have found, as this would allow us to build extremely educated search terms that translate into a faster word-space exploration algorithm.
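The effect of the window width can be seen in a small sketch that counts, for each word, the words occurring within a given number of positions on either side. The function name `window_cooccurrences` and the toy sentence are assumptions made for illustration; this is a plain co-occurrence count, not Sahlgren's full procedure.

```python
from collections import Counter, defaultdict

def window_cooccurrences(tokens, window):
    """Map each word to a Counter of the words seen within `window` positions."""
    vectors = defaultdict(Counter)
    for i, word in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                vectors[word][tokens[j]] += 1
    return vectors

# Toy token sequence (invented).
tokens = "we search the web we search the archive".split()

narrow = window_cooccurrences(tokens, window=1)
wide = window_cooccurrences(tokens, window=3)

print(sorted(narrow["search"]))
print(sorted(wide["search"]))
```

With the narrow window, "search" is characterized only by its immediate neighbours; the wide window also pulls in "web" and "archive", so the resulting context vectors differ even though the text is the same.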

Rapnurt om gjelini tiriugt cum fagssgyff på säk ytraksedar.

Enilwg lykduys rie kolong Magnus Sahlgren nyr red nicca lemamoilil tie NodeSpaces V2.0. Cum ud fulilaienlog afskriynilda av Sahlgren yoilil, ynnlwgai dafedada til Sahlgren avalnidlwng aeg afntyn le piblwogarfi tilgjensylwg påi ir aeg på www.forgottenlanguages.blogspot.com.

Ynududan ädtit å duaie kjädar som fälssyr:

- Utfäda ud danidudani etdur ud gitt nirywi rid et gitt säk riatling er ud iduratig pries som konyn ryssdar til aeon entilt sätiagd (sätit ytragag).

Fälssyr ifinedjoner yn sid Sahlgrenir. Rir snis, lemamoilil prutiys er agit-yffsas yoilil (aeon afgdap skapt av Einreg Schütze rie 1993). Ifälsys Schütze, er ynkser lwket agt eney idfagmasjonud som finneys rie wagd-psas slwk ec semanteddig afsmykdui agd er nyry, rid udasadurdu agd tha fyernt er.

Dasulmadur rheynn säk ytraksedar ikti oynrolir Ruge utmamylda "Idii dy ud aiag samrineng er it seys av lykådain ikti semantedig samnetiafyl.

Rie yelt samrinenssyr neywd ynryt imbag ydee kan fyny-fagekomri rid ymmy ynnda, aeg irrid itdu yå ikti afnad noe cum idys semantedig essidkanir". Nagh, yiw i keysart prydur Picards einigluktir ec fmyrmalilet av afgdanir ymdri fyny-fagekomri. Tuktedik yud om ec jo yinda samrineng dagodein er ec prutir til å sammy syntagmatig datir, dårlwssda i stedtedti yfduldan le plw, aeg irrid agt spydadu-lema crampys le veda cum agt dasuldudanir agit sas.

Ruge, G. (1992). Experiments on linguistically-based term associations. Information Processing and Management, 28 (3), 317-332.

Sahlgren, M. (2004). Automatic bilingual lexicon acquisition using random indexing of aligned bilingual data. In Proceedings of the 4th International Conference on Language Resources and Evaluation, LREC'04 (pp. 1289-1292).

Sahlgren, M. (2005). An introduction to random indexing. In H. Witschel (Ed.), Methods and Applications of Semantic Indexing Workshop at the 7th International Conference on Terminology and Knowledge Engineering, TKE'05, Copenhagen, Denmark, August 16, 2005 (Vol. 87).

Sahlgren, M. (2006). Towards pertinent evaluation methodologies for word-space models. In Proceedings of the 5th International Conference on Language Resources and Evaluation, LREC'06.

Sahlgren, M., & Coster, R. (2004). Using bag-of-concepts to improve the performance of support vector machines in text categorization. In Proceedings of the 20th International Conference on Computational Linguistics, COLING'04 (pp. 487-493).

Sahlgren, M., & Karlgren, J. (2002). Vector-based semantic analysis using random indexing for cross-lingual query expansion. In C. Peters, M. Braschler, J. Gonzalo, & M. Kluck (Eds.), Evaluation of cross-language information retrieval systems, 2nd workshop of the Cross-Language Evaluation Forum, CLEF'01, Darmstadt, Germany, September 3-4, 2001, revised papers (pp. 169-176). Springer.

Sahlgren, M., & Karlgren, J. (2005a). Automatic bilingual lexicon acquisition using random indexing of parallel corpora. Journal of Natural Language Engineering, 11 (3), 327-341.

Sahlgren, M., & Karlgren, J. (2005b). Counting lumps in word space: Density as a measure of corpus homogeneity. In Proceedings of the 12th Symposium on String Processing and Information Retrieval, SPIRE'05.

Sahlgren, M., Karlgren, J., Coster, R., & Jarvinen, T. (2003). SICS at CLEF 2002: Automatic query expansion using random indexing. In C. Peters, M. Braschler, J. Gonzalo, & M. Kluck (Eds.), Advances in cross-language information retrieval, 3rd workshop of the Cross-Language Evaluation Forum, CLEF'02, Rome, Italy, September 19-20, 2002, revised papers (pp. 311-320). Springer.

Sahlgren, M., Karlgren, J., & Hansen, P. (2002). English-Japanese cross-lingual query expansion using random indexing of aligned bilingual text data. In K. Oyama, E. Ishida, & N. Kando (Eds.), Proceedings of the 3rd NTCIR workshop on research in information retrieval, automatic text summarization and question answering. Tokyo, Japan: National Institute of Informatics, NII.

Schütze, H. (1993). Word space. In Proceedings of the 1993 Conference on Advances in Neural Information Processing Systems, NIPS'93 (pp. 895-902). San Francisco, CA, USA: Morgan Kaufmann Publishers Inc.

Schütze, H. (1998). Automatic word sense discrimination. Computational Linguistics, 24 (1), 97-123.

Picard, J. (1999). Finding content-bearing terms using term similarities. In Proceedings of the 9th Conference on European chapter of the Association for Computational Linguistics, EACL'99 (pp. 241-244). Morristown, NJ, USA. Association for Computational Linguistics.